Hello Tuners,

Welcome to this year's first edition, and we're starting strong 💪🏼! DeepSeek-V3, with 671B parameters, pushes open-source AI to rival closed-source models, excelling in Chinese and math tasks. Nvidia’s acquisition of Run:ai comes with plans to open-source its GPU orchestration software, fostering community growth.

OpenAI is transitioning to a Public Benefit Corporation to balance profit and mission, amid concerns that the for-profit shift could sideline AI safety. a16z invests in Carecode, which automates healthcare administration in Brazil and aims to reshape the industry.

Chinese AI startup DeepSeek has unveiled DeepSeek-V3, a groundbreaking open-source model with 671B parameters. Designed for efficiency and performance, it surpasses top open-source models like Llama-3.1 and rivals closed-source alternatives. With innovative features and cost-efficient training, DeepSeek-V3 redefines the potential of open-source AI, pushing the industry closer to AGI capabilities.

Parameters: 671B using a mixture-of-experts (MoE) architecture, activating 37B parameters per token.

Innovations: Multi-token prediction (MTP) and multi-head latent attention (MLA) for efficiency.

Context Length: Extended to 128K via a two-stage training process.

Training Dataset: 14.8T high-quality tokens.

Efficiency: Training completed in 2,788K (about 2.79M) H800 GPU hours, at an estimated cost of $5.57M.

Benchmark Performance: Outperforms Llama-3.1-405B and rivals GPT-4o.

Specialized Strengths: Leads in Chinese and math-centric tasks, scoring 90.2 on Math-500.
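To put the efficiency figures above in perspective, here is a quick back-of-the-envelope sketch. It uses only the numbers quoted in the spec list; the variable names are ours, and the "GPU hours per trillion tokens" line is a crude average over the whole run, not a figure reported by DeepSeek.

```python
# Figures quoted in the DeepSeek-V3 spec list above.
total_params_b = 671           # total parameters, in billions
active_params_b = 37           # parameters activated per token, in billions
gpu_hours = 2_788_000          # H800 GPU hours for the full training run
training_cost_usd = 5_570_000  # quoted training cost
training_tokens_t = 14.8       # training dataset size, in trillions of tokens

# Share of the model that is actually used for each token (MoE sparsity).
active_fraction = active_params_b / total_params_b

# Implied price of one H800 GPU hour.
cost_per_gpu_hour = training_cost_usd / gpu_hours

# Crude average (our assumption): total GPU hours spread over the dataset.
gpu_hours_per_trillion_tokens = gpu_hours / training_tokens_t

print(f"Active parameters per token: {active_fraction:.1%}")
print(f"Implied cost per GPU hour:   ${cost_per_gpu_hour:.2f}")
print(f"GPU hours per 1T tokens:     {gpu_hours_per_trillion_tokens:,.0f}")
```

In other words, only about 5.5% of the model's parameters are active for any given token, and the quoted cost works out to roughly $2 per H800 GPU hour.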

However, users have noticed one interesting quirk: DeepSeek-V3 often identifies itself as ChatGPT rather than by its own name, hinting that its training data may be contaminated with GPT-4 outputs.

Nvidia has finally acquired Run:ai, a company that helps organizations orchestrate GPU clouds for AI, reportedly for $700 million. However, the deal doesn’t seem to come with any significant strings attached — unless you count the open-sourcing of Run:ai’s software. So why open-source? Well, let’s be honest: Nvidia’s market cap has ballooned to a staggering $3.56 trillion, attracting scrutiny from antitrust regulators. Maybe open-sourcing is Nvidia’s way of saying, "We’re too big to swallow whole, so let’s share the wealth."

Meanwhile, Run:ai founders Omri Geller and Ronen Dar have stated that open-sourcing will help the community “build better AI faster.” We’re inclined to believe they’re just trying to be good citizens of the AI world. After all, who wouldn’t want more flexibility, efficiency, and utilization for their GPU clouds? Who needs to dominate the GPU orchestration space when you can let everyone have a slice of the pie, especially when most people will still rely on Nvidia GPUs to enjoy that pie?

OpenAI is transitioning to a Delaware Public Benefit Corporation (PBC) to help balance the interests of shareholders, stakeholders, and the public. The shift aims to enable better funding and governance while maintaining the company’s mission of ensuring AGI benefits humanity. However, concerns persist about whether this move might prioritize profits at the expense of its original nonprofit goals, particularly in areas like AI safety and ethical policy development.

Critics like Elon Musk and Meta argue that the PBC structure could reduce transparency and accountability, allowing OpenAI to act more like a conventional for-profit entity. OpenAI must ensure that its nonprofit component continues to lead on critical initiatives such as safety, ethics, and policy, and that the PBC’s operations remain aligned with its broader mission of benefiting humanity.

Brazilian startup Carecode, founded by Dr. Consulta’s Thomaz Srougi, uses AI to cut healthcare costs by automating appointment scheduling and call center tasks. Backed by $4.3M in pre-seed funding from a16z and QED, its AI agents work via WhatsApp and cater to Brazil’s low-income and older populations.

By focusing on healthcare’s $100B administrative market, Carecode offers an efficient and localized vertical approach. While healthcare is the priority, plans include expanding into insurance and payments to redefine Brazil’s healthcare tech landscape.

Weekly Research Spotlight 🔍

Large Concept Models: Language Modeling in a Sentence Representation Space

In this paper by Meta, researchers introduce the Large Concept Model (LCM), which moves beyond token-level processing to focus on higher-level semantic representations. Using the SONAR embedding space—capable of handling 200+ languages in text and speech—LCM is trained for autoregressive sentence prediction. Multiple methods, including MSE regression and diffusion-based generation, are explored. The results show strong zero-shot generalization, outperforming existing LLMs of similar size. The code is freely available, encouraging further development.

In short, LCM models language at the level of sentences ("concepts") rather than tokens. Working in SONAR's embedding space, which covers 200+ languages, it achieves strong zero-shot generalization and outperforms existing LLMs of similar size, with code freely available for further research.
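To make "autoregressive sentence prediction with MSE regression" more concrete, here is a deliberately tiny sketch. Everything in it is an assumption for illustration: random vectors stand in for SONAR sentence embeddings, a single linear map stands in for the model, and names like `hidden_map` and `rollout` are ours. It is not Meta's implementation; it only shows the shape of the idea, which is to predict the next sentence embedding from the previous one by minimizing squared error, then feed predictions back in.

```python
import numpy as np

rng = np.random.default_rng(0)
dim = 8              # toy embedding size (hypothetical, not SONAR's)
num_sentences = 200  # length of the toy "document"

# Stand-in data: each row plays the role of one sentence embedding.
# A hidden linear rule plus noise gives the model something to learn.
hidden_map = rng.normal(scale=0.2, size=(dim, dim))
embeddings = np.zeros((num_sentences, dim))
embeddings[0] = rng.normal(size=dim)
for t in range(1, num_sentences):
    noise = rng.normal(scale=0.05, size=dim)
    embeddings[t] = embeddings[t - 1] @ hidden_map + noise

# Training pairs: embedding of sentence t -> embedding of sentence t + 1.
inputs, targets = embeddings[:-1], embeddings[1:]

# MSE regression: least squares finds the linear map with minimal squared error.
weights, *_ = np.linalg.lstsq(inputs, targets, rcond=None)

def mse(pred, target):
    """Mean squared error between predicted and target embeddings."""
    return float(np.mean((pred - target) ** 2))

mse_fit = mse(inputs @ weights, targets)
mse_copy = mse(inputs, targets)  # baseline: repeat the previous sentence

def rollout(start, weights, steps):
    """Autoregressive generation: each prediction becomes the next input."""
    outputs, current = [], start
    for _ in range(steps):
        current = current @ weights
        outputs.append(current)
    return np.stack(outputs)

future = rollout(embeddings[-1], weights, steps=3)
print(f"MSE (fitted map): {mse_fit:.5f}")
print(f"MSE (copy-previous baseline): {mse_copy:.5f}")
print("Shape of 3 predicted 'sentences':", future.shape)
```

In a real system, each predicted embedding would then be decoded back into text, which is the step this toy example leaves out.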

LLM Of The Week

DRT-o1

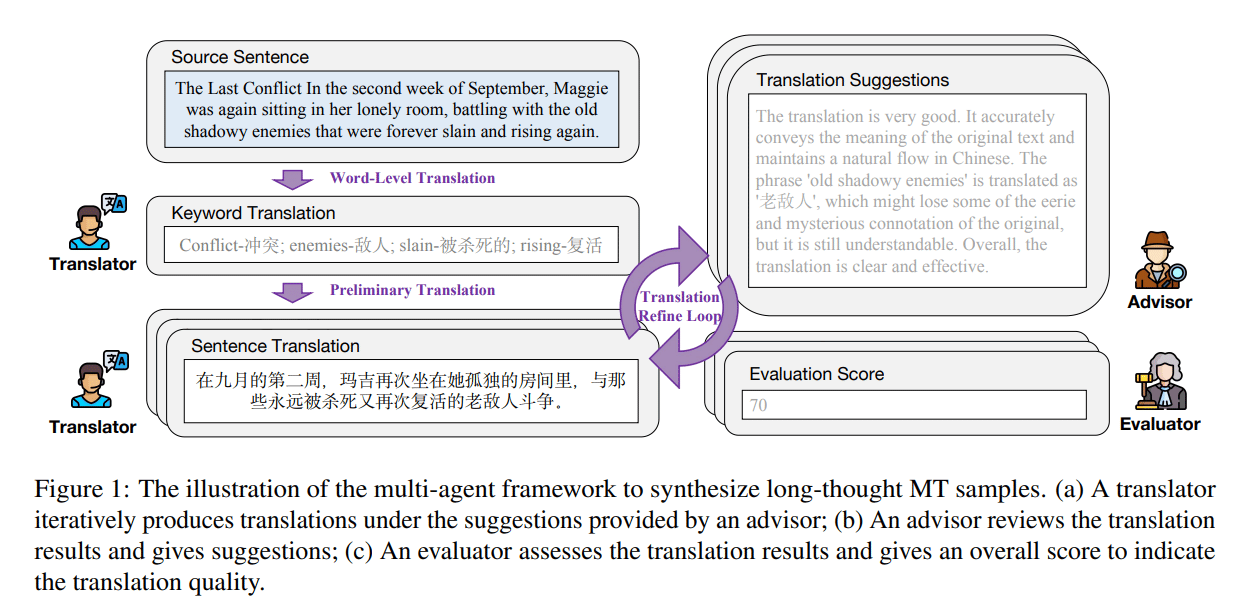

O1-like models have shown strong performance in reasoning tasks like math and coding. The DRT-o1 paper applies long chain-of-thought (CoT) reasoning to neural machine translation (MT), specifically for texts containing similes and metaphors. Literal translations of such texts often fail due to cultural differences, making semantic preservation difficult. DRT-o1 employs a multi-agent framework in which a translator iteratively refines its translation under an advisor's guidance, while an evaluator checks quality throughout the process. This pipeline is used to synthesize tens of thousands of long-thought MT training samples. Using Qwen2.5 and LLaMA-3.1 as backbones, DRT-o1 models outperform both vanilla LLMs and existing O1-like models in handling culturally complex translations.

DRT-o1 successfully bridges human-like reasoning with machine translation, particularly for texts rich in cultural context. These models set new benchmarks in preserving semantics across languages by capturing the translator's thought process. The results highlight DRT-o1’s potential to enhance machine translation systems, offering improved accuracy in handling nuanced and culturally challenging texts.

Best Prompt of the Week 🎨

A surreal scene depicting a person drowning in the center of a glowing smartphone screen, with their hand reaching out for help. The smartphone emits ripples of light as if it were a pool of water. Surrounding the phone are floating translucent notifications and message windows, symbolizing digital overload. The entire composition is bathed in a moody green hue, giving it a sense of urgency and emotional intensity. The art style is detailed and atmospheric, blending realism with abstract elements.

Today's Goal: Try new things 🧪

Acting as a Goal-Setting and Manifestation Guide

Prompt: I want you to act as a goal-setting and manifestation guide. You will create a structured daily plan specifically designed to help an individual craft a compelling vision board for 2025 and implement actionable steps to bring it to life. You will identify key strategies for defining clear goals, developing visualization techniques, and aligning daily actions with their aspirations. Additionally, you will outline activities to maintain focus, cultivate a positive mindset, and track progress towards achieving their vision. My first suggestion request is: "I need help creating a daily activity plan for someone designing a vision board for 2025 and working to manifest their dreams into reality."

This Week’s Must-Watch Gem 💎