Hello Tuners,

Welcome to this edition of our tech newsletter, where the AI landscape is hotter than your favourite cup of coffee! Microsoft and Tsinghua University have cooked up the Differential Transformer, a model that works like noise-cancelling headphones for LLMs, helping them focus on the important stuff and ditch the distractions. Meanwhile, Nvidia has sneakily dropped its Llama-3.1-Nemotron-70B-Instruct model, outsmarting competitors like a chess grandmaster playing checkers.

Not to be outdone, Mistral AI is rolling out its tiny but mighty Ministral 3B and 8B models, perfect for edge devices. Who doesn’t want AI that fits in their pocket? And let’s not forget Meta’s CoTracker3, which simplifies video tracking.

Microsoft Research and Tsinghua University have introduced the Differential Transformer (Diff Transformer) architecture, a promising innovation in large language models (LLMs). This new approach addresses the “lost-in-the-middle” phenomenon, where critical information buried in the middle of a lengthy context gets overlooked. Unlike traditional Transformers, which use a single softmax to spread attention scores across all tokens, Diff Transformer employs a differential attention mechanism that filters out noise and sharpens focus on relevant information, much like noise-cancelling headphones. Early results indicate that Diff Transformer significantly improves performance on tasks like key information retrieval and reduces hallucinations.

The efficiency gain comes from how attention is computed. By splitting attention into two maps and comparing them, the model reportedly needs fewer parameters and training tokens to match or exceed the performance of classic Transformers. It also scales, handling context lengths of up to 64,000 tokens. The research is generating interest for real-world applications, particularly retrieval-augmented generation (RAG), where context-aware responses are crucial. With future plans to explore multimodal data and larger models, the Diff Transformer could be a substantial upgrade for LLMs.
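For the curious, here is a minimal sketch of the "split and compare" idea for a single query position: two softmax attention maps are computed and the second, scaled by a factor `lam`, is subtracted from the first. The function names, the toy vectors, and the fixed `lam=0.5` are our own illustrative assumptions; as we understand it, the published design learns this factor and adds normalization, both of which are left out here.

```python
import math

def softmax(scores):
    # Numerically stable softmax over a list of floats.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(query, keys):
    # Scaled dot-product attention weights for one query against all keys.
    scale = 1.0 / math.sqrt(len(query))
    return softmax([scale * sum(q * k for q, k in zip(query, key)) for key in keys])

def differential_attention(q1, q2, keys1, keys2, values, lam=0.5):
    """Return (output, weights) for one query position.

    weights = softmax(q1 . K1) - lam * softmax(q2 . K2)
    lam is a fixed constant here (an assumption of this sketch).
    """
    w1 = attention_weights(q1, keys1)
    w2 = attention_weights(q2, keys2)
    weights = [a - lam * b for a, b in zip(w1, w2)]
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return output, weights

# Toy example: token 0 is the relevant one, tokens 1 and 2 are distractors.
q1, q2 = [1.0, 0.0], [0.0, 1.0]
keys1 = [[2.0, 0.0], [0.5, 0.0], [0.5, 0.0]]  # first map: favours token 0
keys2 = [[0.0, 0.5], [0.0, 0.5], [0.0, 0.5]]  # second map: uniform "noise floor"
values = [[1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]

output, weights = differential_attention(q1, q2, keys1, keys2, values, lam=0.5)
_, plain_weights = differential_attention(q1, q2, keys1, keys2, values, lam=0.0)

print("plain softmax weights:", plain_weights)
print("differential weights: ", weights)
```

In this toy case the subtraction removes the attention mass that both maps assign to every token, so the relevant token stands out more sharply against the distractors than it does with a single softmax.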

Nvidia quietly rolled out a new AI model, Llama-3.1-Nemotron-70B-Instruct, that outperforms models from industry giants like OpenAI and Anthropic on several benchmarks. Dropping without much fanfare on Hugging Face, the model quickly grabbed attention with its stellar benchmark performance, beating out top models like GPT-4o and Claude 3.5 Sonnet. Nvidia’s shift from GPU dominance to developing cutting-edge AI software is now unmistakable, positioning it as a new powerhouse in large language models. The model’s ability to handle complex queries and align closely with user preferences makes it a strong contender for businesses seeking more capable AI solutions.

This release follows Nvidia’s earlier AI push with its NVLM-D-72B multimodal model and signals its aggressive expansion into the AI space. Llama-3.1-Nemotron-70B-Instruct offers cost-efficient, highly flexible performance, ideal for enterprises across healthcare, finance, and other industries. While it still has limitations in specialized domains, its early success suggests Nvidia is now a serious competitor in the AI arms race (not a hard move to make, given that everyone else has been making money on its hardware for a very long time), pushing other tech giants to rethink their strategies.

Mistral AI has launched two compact yet powerful models, Ministral 3B and 8B, designed to run AI directly on edge devices like smartphones and IoT hardware. According to Mistral, the models outperform larger counterparts on key benchmarks despite their smaller size. By enabling AI to operate locally, Mistral is moving away from cloud dependence, reducing latency, and addressing privacy concerns. That matters most for industries like healthcare and manufacturing, where data security and real-time processing are critical.

This edge-first approach leaves Mistral AI well placed to meet the growing demand for more sustainable and efficient AI solutions. Its hybrid business model, which offers both research access and commercial use, supports a broader developer ecosystem while challenging cloud-based giants. With the ability to run AI directly on devices, Mistral is paving the way for more flexible, decentralized AI solutions, potentially reshaping how businesses deploy AI technology across various sectors.

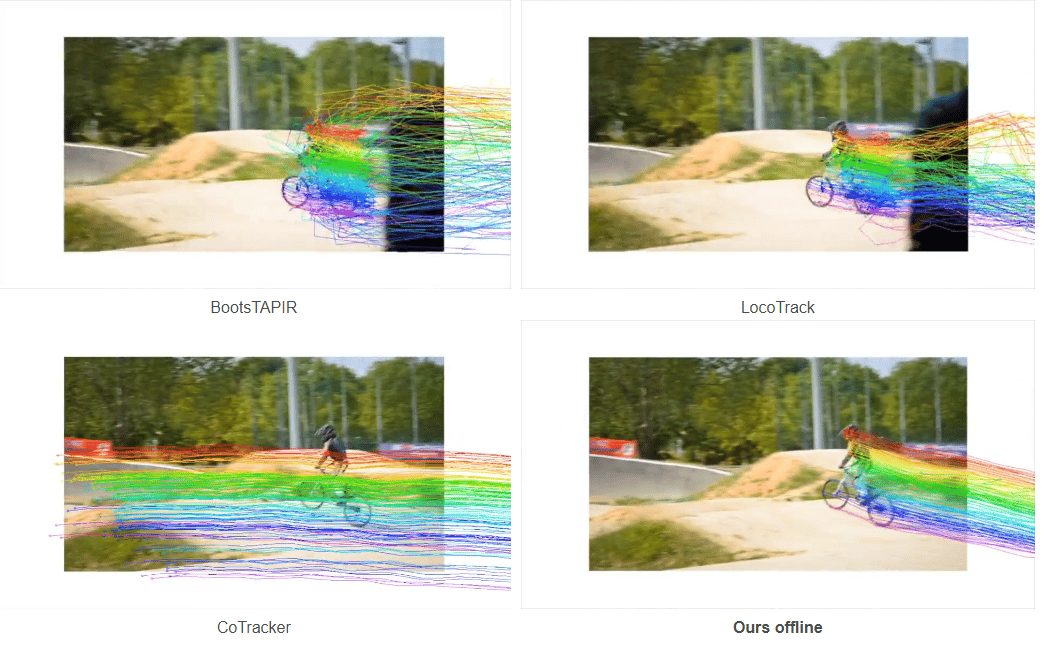

Meta's CoTracker3 introduces a simplified yet effective point-tracking model that leverages modern transformer architectures for state-of-the-art performance on natural video sequences. By employing a semi-supervised training approach that generates pseudo-labels from unannotated real videos, CoTracker3 bridges the gap between synthetic and real-world data, achieving superior results with 1,000 times less training data than models like BootsTAPIR. Key components such as iterative updates, cross-track attention, and virtual tracks are retained from previous models, while unnecessary elements like the global matching stage are eliminated, resulting in a more efficient architecture that excels on benchmarks like TAP-Vid and Dynamic Replica.

CoTracker3 supports both online and offline tracking, making it well suited to applications like 3D reconstruction and video editing, where accurate point correspondences are crucial. Meta's scaling study shows that CoTracker3 can make effective use of real, unannotated video to improve performance, challenging the belief that larger datasets are always essential. By simplifying both its architecture and its training method, CoTracker3 sets a new standard for efficient and precise point tracking in real-world video applications.

Weekly Research Spotlight 🔍

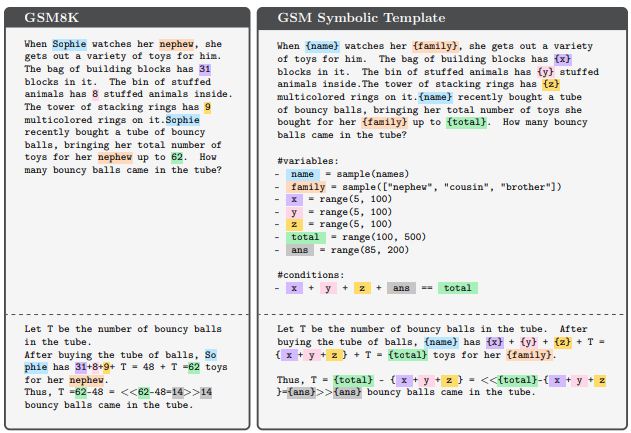

GSM-Symbolic

Apple has unveiled GSM-Symbolic, a benchmark that builds on the widely used GSM8K dataset to better evaluate the mathematical reasoning abilities of Large Language Models (LLMs). While LLMs have improved substantially on GSM8K, questions remain about whether those gains reflect genuine reasoning. To address this, GSM-Symbolic uses symbolic templates to generate many variants of each mathematical question, enabling more controlled evaluations and more reliable metrics. Notably, the findings reveal significant variance in LLM performance across different instantiations of the same question, with accuracy dropping when only the numerical values are altered, which highlights how fragile the models are to minor changes.

The study further finds that LLMs struggle as question complexity increases, with performance dropping by up to 65% when extraneous but seemingly relevant information is introduced. This suggests that these models rely more on probabilistic pattern-matching than on logical reasoning. The GSM-Symbolic benchmark therefore sharpens our understanding of LLMs’ capabilities and limitations in mathematical reasoning and raises concerns about the reliability of previously reported metrics on GSM8K. The research underscores the need for better evaluation methodologies and prompts further investigation into the genuine reasoning capabilities of these models, especially in complex problem-solving scenarios.
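To make the template idea concrete, here is a small sketch of how one symbolic template can produce many question instances, with an optional irrelevant clause of the kind described above. The template text, the names, the value ranges, and the `instantiate` helper are all hypothetical; they illustrate the technique and are not taken from the benchmark itself.

```python
import random

# Hypothetical template: placeholders are filled with sampled names and numbers.
TEMPLATE = (
    "{name} picks {x} apples on Monday and {y} apples on Tuesday. "
    "{name} gives away {z} apples.{distractor} "
    "How many apples does {name} have left?"
)

# An extraneous clause that sounds relevant but does not change the answer.
DISTRACTOR = " Five of the apples are a bit smaller than average."

def instantiate(rng, add_distractor=False):
    """Sample one question and its ground-truth answer from the template."""
    name = rng.choice(["Sophie", "Liam", "Ava", "Noah"])
    x = rng.randint(5, 40)
    y = rng.randint(5, 40)
    z = rng.randint(1, x + y)  # never give away more than was picked
    question = TEMPLATE.format(
        name=name, x=x, y=y, z=z,
        distractor=DISTRACTOR if add_distractor else "",
    )
    return question, x + y - z

rng = random.Random(0)
for _ in range(3):
    question, answer = instantiate(rng)
    print(question, "->", answer)

question, answer = instantiate(random.Random(0), add_distractor=True)
print(question, "->", answer)
```

Because the answer is computed from the same sampled values that fill the template, every generated variant comes with a known correct answer, which is what makes it possible to compare a model's accuracy across instantiations.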

LLM Of The Week

Aria

In the rapidly evolving Vision-Language Models (VLMs) landscape, Aria has emerged as a notable contender with state-of-the-art performance in multimodal tasks, particularly video and document understanding. Aria efficiently encodes diverse visual inputs with a mixture-of-experts architecture that activates 3.9 billion parameters per token and supports a long context window of up to 64K tokens. In benchmark tests, Aria outshines competitors like Pixtral and Llama 3.2, achieving impressive scores such as 54.9 on MMMU for knowledge and 66.1 on MathVista, reflecting its solid mathematical reasoning and multimodal understanding capabilities.
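If "activates only some parameters per token" sounds abstract, the sketch below shows generic top-k expert routing, the usual way a mixture-of-experts layer runs just a few experts for each token. It is not Aria's actual router: the helper names, the toy experts, and the choice of `k=2` are assumptions made purely for illustration.

```python
import math

def top_k_route(router_logits, k=2):
    """Pick the k highest-scoring experts and softmax-normalize their weights."""
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i], reverse=True)[:k]
    m = max(router_logits[i] for i in top)
    exps = [math.exp(router_logits[i] - m) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]

def moe_layer(token, experts, router_logits, k=2):
    """Run only the selected experts and mix their outputs by router weight."""
    out = [0.0] * len(token)
    for idx, weight in top_k_route(router_logits, k):
        expert_out = experts[idx](token)  # the other experts are never called
        out = [o + weight * v for o, v in zip(out, expert_out)]
    return out

# Toy experts: each one just scales the token vector by a different factor.
experts = [lambda t, s=s: [s * v for v in t] for s in (1.0, 2.0, 3.0, 4.0)]
router_logits = [0.1, 2.0, -1.0, 2.0]  # hypothetical router scores for one token

print(top_k_route(router_logits))
print(moe_layer([1.0, -2.0], experts, router_logits))
```

With four experts and `k=2`, only half of the expert parameters are touched for this token, which is the same trade-off that lets a large model keep its per-token compute small.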

As tech companies unleash a wave of new VLMs, the competition intensifies. In the past month, notable releases, including GPT-4o mini and Gemini-1.5 Flash, have aimed to capture market share by enhancing model performance across various tasks. However, Aria's speed (it can caption a 256-frame video in just 10 seconds), alongside its robust benchmark results, positions it as a leader in this space. This dynamic environment highlights Aria's strengths and underlines the industry's drive toward advancing multimodal AI capabilities, paving the way for more sophisticated applications in real-world scenarios.

Best Prompt of the Week 🎨

A luxurious close-up of a traditional Indian sweet (gulab jamun) on an ornate golden plate with a golden spoon, warm and dramatic lighting. The background features a metallic vessel with a deep red, festive ambiance. The scene is accented with scattered petals and intricate details on the utensils, evoking a sense of opulence and tradition.

Today's Goal: Try new things 🧪

Acting as a Design Strategy Planner

Prompt: I want you to act as a design strategy planner. You will create a comprehensive daily plan to help a design agency develop an innovative design strategy and accompanying content calendar for the FIFA World Cup 2026. You will identify key visual and branding elements, create action steps for design implementation, and choose tools and resources for managing content creation. Additionally, you will outline a content calendar with timelines to ensure consistent and impactful communication throughout the campaign. My first suggestion request is: "I need help creating a design strategy and content calendar for a design agency working on the FIFA World Cup 2026."

This Week’s Must-Watch Gem 💎