Hello Tuners,

This week in GenAI, AWS re:Invent brought out a plethora of developer-friendly features for automation, operations, and inference on Amazon Bedrock and SageMaker. And in news that sounds like a crypto scam but isn't, Nous Research is training its newest 15B-parameter model across oceans with distributed learning.

Fei-Fei Li’s World Labs unveiled the first of its world models, and they are a thing to behold! Meanwhile, last week’s hero is this week’s discussion: Qwen QwQ 32B is under fire for censoring questions about controversial history.

AWS re:Invent has always been a treasure trove of innovation, and this year is no exception. As someone with a special fondness for AWS (my first AI certification was in SageMaker), I can't help but marvel at how far this platform has come. Today's AWS is sleeker and more intuitive. Here’s a roundup of the most exciting announcements from this year’s event:

Automated Reasoning on Amazon Bedrock

Bedrock now ensures near-perfect accuracy with new reasoning checks that tackle AI hallucinations head-on, making it a game-changer for enterprises relying on dependable AI outputs.

Prompt Caching and Intelligent Routing

AWS introduced Intelligent Prompt Routing to direct queries to the right-sized models and Prompt Caching to slash token generation costs by 90%, boosting efficiency and cutting latency for AI applications.

SageMaker HyperPod Task Governance

HyperPod's latest feature optimizes GPU utilization, reducing AI infrastructure costs by up to 40% while prioritizing tasks smartly, perfect for scaling AI initiatives on a budget.

Multi-Agent Orchestration on Bedrock

Bedrock now supports multi-agent workflows, enabling businesses to coordinate specialized AI agents for complex tasks, like Moody's improved analysis with orchestrated precision.

Nova Generative AI Model Family

AWS launched the Nova models for creating text, images, and videos. Integrated with Bedrock, these models empower businesses to generate creative content at scale.

These updates show that AWS is doing everything possible to simplify AI workflows, reduce costs, and facilitate innovative solutions.
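To get a feel for what a 90% Prompt Caching discount could mean in practice, here is a rough back-of-the-envelope sketch. It is not AWS's billing logic: the per-token price, the request sizes, and the assumption that the discount applies only to a repeated prompt prefix after the first request are all made up for illustration.

```python
# Back-of-the-envelope estimate of prompt-caching savings.
# Assumptions (NOT from AWS docs): a flat hypothetical price per input token,
# and a 90% discount applied only to the repeated prompt prefix on cache hits.

def request_cost(prefix_tokens, new_tokens, price_per_token, cached, cache_discount=0.90):
    """Input cost of one request; a cached prefix is billed at a discounted rate."""
    prefix_rate = price_per_token * (1 - cache_discount) if cached else price_per_token
    return prefix_tokens * prefix_rate + new_tokens * price_per_token


def total_cost(n_requests, prefix_tokens, new_tokens, price_per_token, use_cache):
    """Total input cost over a batch of requests that share the same prefix."""
    total = 0.0
    for i in range(n_requests):
        # The first request always pays full price because the cache is still empty.
        cached = use_cache and i > 0
        total += request_cost(prefix_tokens, new_tokens, price_per_token, cached)
    return total


PRICE = 1e-6  # hypothetical price per input token, in dollars

without_cache = total_cost(100, 10_000, 200, PRICE, use_cache=False)
with_cache = total_cost(100, 10_000, 200, PRICE, use_cache=True)
savings = 1 - with_cache / without_cache

print(f"without cache: ${without_cache:.4f}")
print(f"with cache:    ${with_cache:.4f}")
print(f"overall saving: {savings:.1%}")
```

With these made-up numbers, the overall saving lands a little below the headline figure, because the first request and every request's new tokens are still billed at full price.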

Nous Research is pre-training a 15-billion-parameter language model in a way that's never been done before. Instead of relying on costly AI data centers or power-hungry superclusters, they're utilizing a distributed approach with hardware spread across the globe. This decentralized method is powered by their open-source technology, Nous DisTrO (Distributed Training Over-the-Internet). It reduces the heavy bandwidth traditionally needed for training large models.

Check Out Live Stats: distro.nousresearch.com

The current pre-training run, live-streamed for public tracking on distro.nousresearch.com, demonstrates how a diverse, global network of machines can efficiently train models on lower-cost infrastructure, potentially challenging the AI training status quo. DisTrO’s efficiency breakthrough is nothing short of revolutionary. It reduces inter-GPU communication requirements by up to 10,000 times, allowing for large-scale model training even on slower internet connections, such as those with 100 Mbps download and 10 Mbps upload speeds.
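The 10,000x figure is easier to appreciate with some quick arithmetic. The sketch below is only an illustration, not how DisTrO works internally. It assumes a naive setup in which each node uploads one 16-bit value per parameter on every synchronization step, then applies the 10,000x reduction and the 10 Mbps upload speed quoted above.

```python
# Rough arithmetic for the bandwidth claim.
# Assumptions (illustrative only): a naive baseline that uploads one 16-bit
# value per parameter per sync step; DisTrO's real protocol is not modeled.

def sync_seconds(payload_bytes, upload_mbps):
    """Seconds needed to upload a payload at a given link speed in megabits/s."""
    bytes_per_second = upload_mbps * 1_000_000 / 8
    return payload_bytes / bytes_per_second


PARAMS = 15_000_000_000   # 15B parameters, from the article
BYTES_PER_VALUE = 2       # assumption: 16-bit values
REDUCTION = 10_000        # "up to 10,000 times", from the article
UPLOAD_MBPS = 10          # upload speed quoted in the article

naive_payload = PARAMS * BYTES_PER_VALUE     # 30 GB per sync
reduced_payload = naive_payload / REDUCTION  # 3 MB per sync

naive_time = sync_seconds(naive_payload, UPLOAD_MBPS)
reduced_time = sync_seconds(reduced_payload, UPLOAD_MBPS)

print(f"naive sync:   {naive_payload / 1e9:.0f} GB, about {naive_time / 3600:.1f} hours")
print(f"reduced sync: {reduced_payload / 1e6:.0f} MB, about {reduced_time:.1f} seconds")
```

Under these assumptions, a single synchronization drops from hours to seconds on a home-grade connection, which is what makes training over the open internet plausible at all.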

Fei-Fei Li, the renowned founder of ImageNet, the dataset that laid the foundation for most Convolutional Neural Network (CNN) architectures and propelled the journey of deep learning in computer vision, is back with a groundbreaking innovation. Her startup, World Labs, has unveiled an AI tool that turns 2D images into fully navigable 3D environments. This tool generates 3D worlds with persistent visual features and consistent geometry by leveraging a simple prompt or reference image. It lets creators control real-time camera movements and integrate generated scenes into their projects.

This new technology can transform industries such as film, video games, and simulation, streamlining the creation of complex 3D environments and consistent AI-generated backgrounds. Though the tool isn't available for general use, it promises to significantly impact content creation workflows, especially when combined with other AI video tools. Animation studios and developers are already experimenting with its ability to stage characters in varied scenes efficiently. Fei-Fei Li's work continues to push the boundaries of AI's creative potential, building on the legacy of ImageNet's role in shaping modern deep neural networks for vision.

China's open-source AI models have been making headlines for their impressive performance on tasks like coding and reasoning. However, they have also faced criticism over their censorship practices, particularly from figures such as Hugging Face CEO Clement Delangue. Models developed in China, like those from Alibaba's Qwen family, often censor sensitive topics. Speaking on an earlier podcast, Delangue expressed concern about the potential impact of Chinese dominance in the AI field, noting that if China were to lead in AI, it could spread specific cultural values that might not align with those of the Western world. More recently, he has been talking about how he sees Chinese AI dominating in 2025.

Qwen QwQ Inferencing

Despite these concerns, not all models emerging from China show these censorship issues; much depends on deployment conditions, prompts, and guidelines. QwQ is part of the broader trend of Chinese AI companies rapidly catching up to their Western counterparts, thanks to the open-source movement. Hugging Face, the world's largest platform for AI models, is at the heart of this shift, with models like Qwen2.5-72B-Instruct and Qwen Coder freely available, showcasing China’s competitive edge in the AI race. However, with models like QwQ-32B censoring sensitive topics, the debate about open-source AI's ethical and political ramifications continues to grow. As AI assistants such as Gemini or those from Anthropic quickly become the search engine of choice, narrative control shifts from a diverse pool of sources to a pre-trained, guard-railed, and privately deployed LLM that serves just the will of its shareholders.

Weekly Research Spotlight 🔍

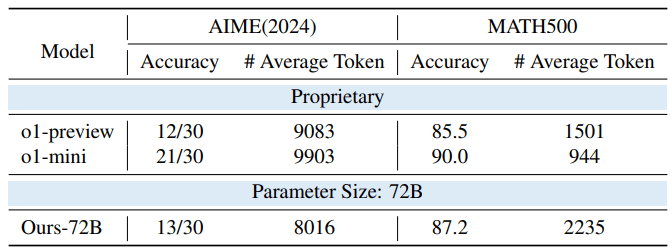

O1 Replication Journey

This paper critically examines current efforts to replicate OpenAI’s O1 model, focusing on knowledge distillation techniques. Building on previous work, the study shows that simple distillation from O1’s API and supervised fine-tuning can achieve superior performance in complex mathematical reasoning tasks. Through extensive experiments, the authors demonstrate that a base model fine-tuned on O1-distilled chains can outperform O1-preview on the American Invitational Mathematics Examination (AIME) while maintaining minimal technical complexity. The research extends beyond mathematics to explore the model’s generalization across tasks such as open-domain question answering and safety, revealing that even models trained on mathematical problem-solving data can excel in broader domains and reduce sycophantic behavior.

The paper offers a detailed technical breakdown of the distillation process and its effectiveness, along with a benchmark framework for evaluating O1 replication attempts based on transparency and reproducibility. The authors caution against over-relying on distillation methods and highlight the importance of developing researchers with a deep understanding of first-principles thinking. This "bitter lesson" underscores the need for education that fosters more grounded and thoughtful AI development, emphasizing that the future of AI innovation relies on a balance between capability and fundamental research principles.

LLM Of The Week

SmolVLM

SmolVLM is a compact, open-source multimodal model designed to process and generate text from arbitrary sequences of images and text. Developed by Hugging Face, SmolVLM excels at tasks like image captioning, visual question answering, and storytelling based on visual content. Its lightweight architecture is ideal for on-device applications, balancing efficiency with strong performance. SmolVLM is based on the Idefics3 architecture and uses SmolLM2 as its language model. Because it handles text and images in arbitrary sequences, it can answer questions about images, describe visual content, or generate text purely from language input.

The model introduces key innovations in efficiency, including radical image compression and the use of 81 visual tokens to encode image patches of size 384×384, enabling faster inference and lower memory use. While it doesn’t support image generation, its multimodal capabilities are enough for many applications. Hugging Face provides a fine-tuning tutorial for adapting SmolVLM to specific tasks, making it a versatile tool for developers needing a compact yet robust model. The model is available under the Apache 2.0 license, ensuring open access for further research and development.
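To see what "81 visual tokens per 384×384 patch" means for a whole image, here is a small sketch. It is not SmolVLM's actual preprocessing code: the `visual_tokens` helper is hypothetical. It assumes the image is tiled into non-overlapping 384×384 patches and that partial patches at the edges count as full ones. The real pipeline may also resize the image or add extra tokens.

```python
import math

# Illustrative token-count arithmetic, NOT SmolVLM's real preprocessing.
# Assumptions: non-overlapping 384x384 tiles, partial edge tiles count as
# full tiles, and no extra tokens (separators, global view) are added.

PATCH_SIZE = 384        # patch edge length in pixels, from the article
TOKENS_PER_PATCH = 81   # visual tokens per patch, from the article


def visual_tokens(width, height, patch=PATCH_SIZE, tokens_per_patch=TOKENS_PER_PATCH):
    """Estimate the number of visual tokens for an image of the given size."""
    cols = math.ceil(width / patch)
    rows = math.ceil(height / patch)
    return rows * cols * tokens_per_patch


# How many pixels each visual token has to summarize.
pixels_per_token = PATCH_SIZE * PATCH_SIZE / TOKENS_PER_PATCH

for size in [(384, 384), (1000, 700), (1536, 1536)]:
    print(size, "->", visual_tokens(*size), "visual tokens")
print(f"about {pixels_per_token:.0f} pixels per token")
```

The takeaway is the compression ratio: each visual token stands in for well over a thousand pixels, which is why the model can keep its context and memory footprint small.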

Best Prompt of the Week 🎨

Create an intricate stained glass pendant featuring a reindeer, using a vibrant green and red color palette. The design should include shiny, metallic textures with clearly defined lines between the stained glass pieces, forming a mosaic effect. Each piece contributes to the reindeer's silhouette, with some allowing light to pass through for added depth. Incorporate mystical elements like runes and magical symbols, blending metallic and gemstone textures for an enchanting, stylish look. The combination of textures, shades, and light interplay creates a captivating, artistic centerpiece perfect for decorative display.

Today's Goal: Try new things 🧪

Acting as a Content Planning Strategist

Prompt: I want you to act as a content planning strategist. You will create a structured daily plan specifically designed to help individuals launch a design-focused podcast where they share insights, discuss their creative work, and take listeners through their design journey. You will identify key steps for content creation and storytelling, develop audience engagement and promotion strategies, select recording and editing tools, and outline additional activities to ensure consistent and impactful episodes. My first request is: "I need help creating a daily activity plan for someone starting a design podcast to share their experiences and guide listeners through their design journey."

This Week’s Must-Watch Gem 💎