Hello Tuners,

Welcome to our newsletter. Let us start by highlighting the Stargate Project, a $500 billion initiative by OpenAI and SoftBank to build AI infrastructure in the U.S. The project aims to develop AGI that surpasses human capabilities in economically valuable tasks. As construction begins in Texas, it signals America's commitment to AI leadership, though its success hinges on answering criticisms of its financial feasibility and strategic direction.

Additionally, Google released an update to Gemini 2.0 Flash Thinking that sets new records in math and science, DeepSeek's open-source model challenges industry giants at a fraction of the cost, and Microsoft's AutoGen v0.4 offers a scalable framework for enterprise AI applications, a release that marks a pivotal moment in the evolution of AI agents.

In a move that has stirred both excitement and skepticism, OpenAI, SoftBank, Oracle, and MGX have launched "The Stargate Project," a $500 billion venture billed as the most significant investment in AI infrastructure to date. It is meant to build new AI infrastructure in the U.S. and support national security, with the longer-term goal of developing artificial general intelligence (AGI) that surpasses human capabilities in economically valuable tasks.

Massive Investment: The Stargate Project represents a $500 billion commitment from major tech players like OpenAI and SoftBank to construct AI infrastructure in the U.S.

Strategic Goals: Aiming to achieve AGI, this initiative seeks to re-industrialize America while bolstering national security.

High-profile Backing: Leaders like Sam Altman and Masayoshi Son announced it alongside President Trump. It promises job creation and industry growth.

Criticism & Competition: Critics argue it is an overreach given the rise of open-source models such as China's DeepSeek-R1, which matches the performance of OpenAI's models at lower cost.

As construction kicks off in Texas, the Stargate Project is a testament to America's commitment to leading global AI innovation. Yet its success hinges on navigating criticisms of financial feasibility and strategic direction. Whether this centralized approach will outpace decentralized alternatives remains a pivotal question for future technological supremacy.

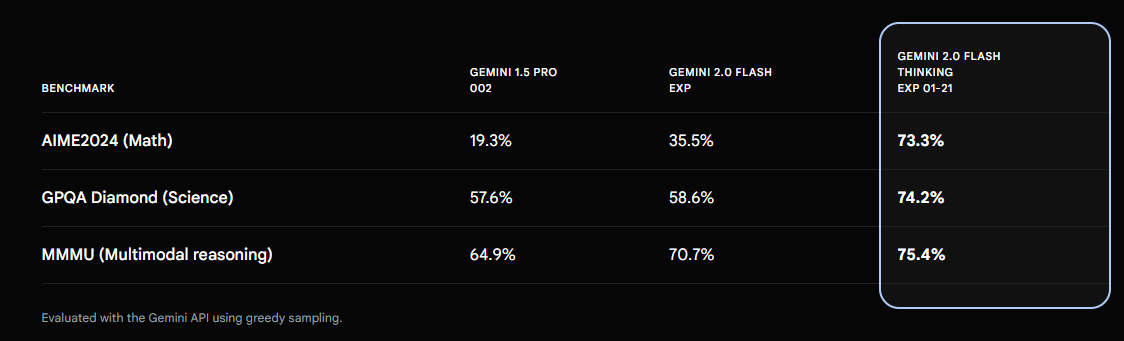

Google has quietly released a significant update to its AI model, Gemini 2.0 Flash Thinking. This model can now explain its reasoning process while setting new records in math and science tasks. With a score of 73.3% on the AIME and 74.2% on the GPQA Diamond benchmark, this free alternative to OpenAI's premium services is turning heads.

The standout feature? It processes up to one million tokens—five times more than OpenAI's o1 Pro model—without breaking a sweat! This expanded context window allows it to juggle multiple research papers or datasets simultaneously, potentially revolutionizing how researchers handle large volumes of information. Google's offering is free during beta testing, making it an enticing option for developers seeking cost-effective AI solutions.

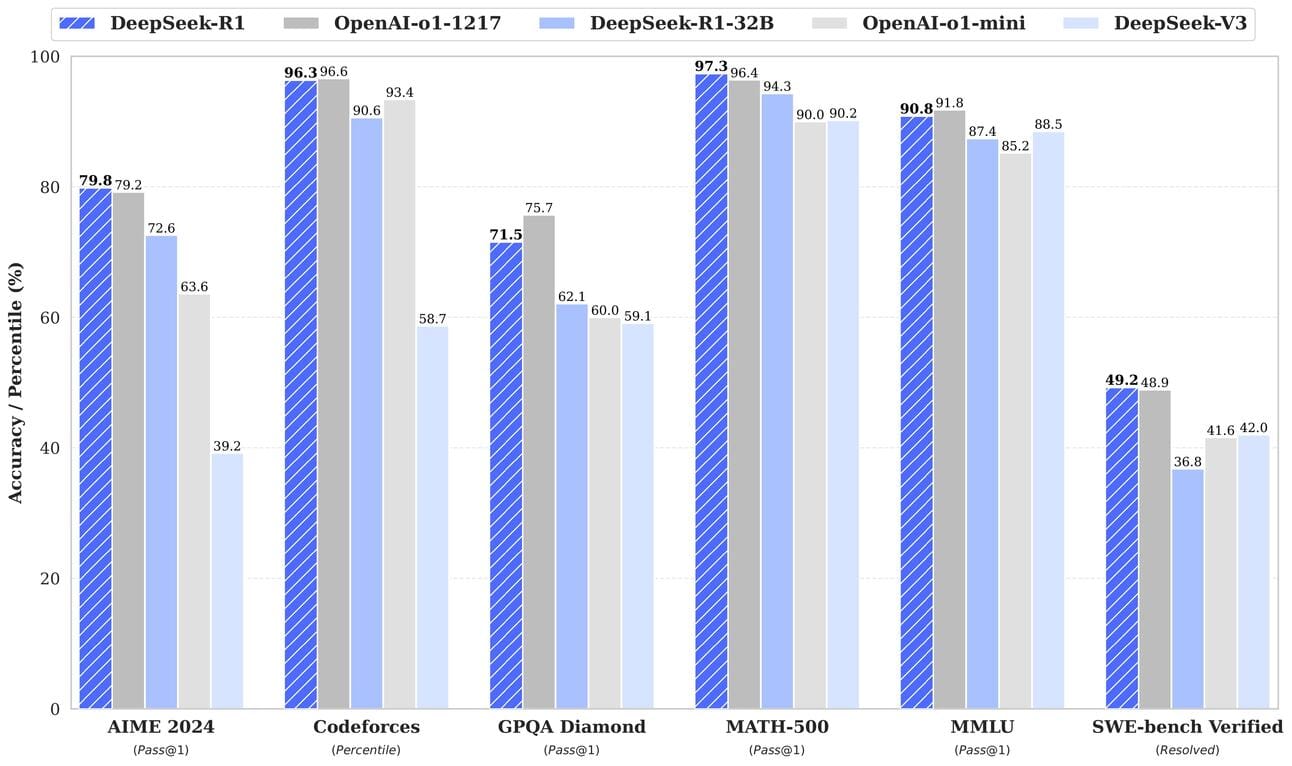

In a bold move shaking up the AI landscape, Chinese startup DeepSeek has unveiled its latest open-source reasoning model, DeepSeek-R1. The new model challenges industry giants by matching the performance of OpenAI's o1 in math, coding, and reasoning tasks at a fraction of the cost. By building on its DeepSeek V3 mixture-of-experts framework, the company has delivered high-caliber results while keeping the model affordable: a whopping 90-95% cheaper than OpenAI's offerings.

DeepSeek-R1 not only narrows the gap between open-source and commercial models but also pushes boundaries through distillation: its reasoning ability has been distilled into smaller, more efficient models based on Llama and Qwen. These distilled models have outperformed larger counterparts, such as GPT-4o, in specific benchmarks. Available on Hugging Face under an MIT license, DeepSeek-R1 is accessible for developers eager to explore cutting-edge AI without breaking the bank. As this new contender enters the arena, it highlights an ongoing shift towards democratized AI development that could redefine future technological advancements.

Microsoft's release of AutoGen v0.4 marks a pivotal moment in the evolution of AI agents. This robust and scalable framework offers a way to build multi-agent systems tailored to enterprise applications. With its asynchronous, event-driven architecture, AutoGen allows agents to perform tasks concurrently, enhancing efficiency and resource utilization. This update positions Microsoft as a strong contender against frameworks like LangChain and CrewAI by seamlessly integrating with Azure and catering to enterprise needs.
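To make "asynchronous, event-driven" a little more concrete, here is a minimal sketch of two agents working concurrently. It deliberately does not use the AutoGen API: it is plain Python `asyncio`, and the `agent` and `run_agents` functions, the agent names, and the delays are all hypothetical stand-ins chosen for illustration.

```python
import asyncio


async def agent(name: str, task: str, delay: float, log: list[str]) -> str:
    """Hypothetical agent: logs a start event, simulates work, returns a result."""
    log.append(f"{name}:start")
    await asyncio.sleep(delay)  # stand-in for a model or tool call
    log.append(f"{name}:done")
    return f"{name} finished {task}"


async def run_agents(log: list[str]) -> list[str]:
    # gather() schedules both coroutines on the event loop, so their waits overlap
    # instead of running one after the other.
    return await asyncio.gather(
        agent("researcher", "search", 0.05, log),
        agent("writer", "draft", 0.01, log),
    )


event_log: list[str] = []
results = asyncio.run(run_agents(event_log))
print(results)
print(event_log)
```

Both agents log their start event before either one finishes, which is the point of the concurrent design: neither agent blocks the other while it waits.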

AutoGen's tight integration with Microsoft's ecosystem provides developers with flexibility and scalability, making it an attractive option for enterprises embedded in Azure. While LangChain focuses on developer-centric tools and CrewAI emphasizes user-friendly interfaces, AutoGen distinguishes itself through extensibility and enterprise readiness. Despite the excitement of these advancements, many organizations remain cautious due to data infrastructure challenges. As AI agent frameworks continue to evolve, balancing technical sophistication with usability will be crucial for widespread adoption in the enterprise landscape.

Weekly Research Spotlight 🔍

Self-adaptive LLMs

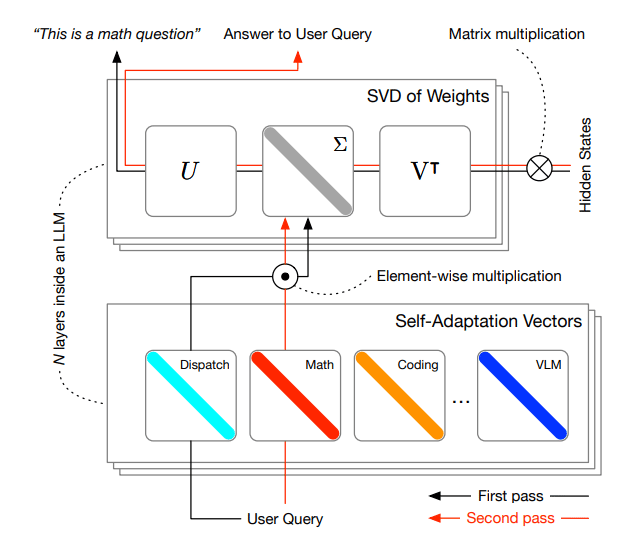

The introduction of Transformer² marks a significant advancement in the realm of self-adaptive large language models (LLMs), addressing the limitations of traditional fine-tuning methods, which are often resource-heavy and inflexible. This framework adapts LLMs to new tasks in real time by selectively modifying only specific components of their weight matrices. During inference, Transformer² uses a two-pass system. In the first pass, a dispatch mechanism identifies the properties of the task. In the second, task-specific "expert" vectors, trained via reinforcement learning, are dynamically mixed to tailor the response to the incoming prompt.
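The two-pass idea can be sketched in a few lines of plain Python. This is a toy illustration, not the authors' implementation: the keyword-based dispatcher, the hand-picked expert vectors (in the real framework they are trained via reinforcement learning), and the three-element list standing in for the adaptable components of a weight matrix are all assumptions made for the example.

```python
# Hypothetical expert vectors: one scaling factor per adaptable component.
EXPERTS = {
    "math": [1.5, 1.0, 0.5],
    "code": [0.5, 1.0, 1.5],
}

# Hypothetical keyword lists used by the toy dispatcher.
KEYWORDS = {
    "math": ("solve", "integral", "equation"),
    "code": ("python", "function", "bug"),
}


def dispatch(prompt: str) -> dict[str, float]:
    """First pass: score each task by keyword hits, normalized to mixing weights."""
    words = prompt.lower().split()
    hits = {task: sum(w in kws for w in words) for task, kws in KEYWORDS.items()}
    total = sum(hits.values())
    if total == 0:
        # No evidence for any task: fall back to a uniform mix.
        return {task: 1 / len(hits) for task in hits}
    return {task: h / total for task, h in hits.items()}


def mix_experts(weights: dict[str, float]) -> list[float]:
    """Second pass, step 1: blend the expert vectors using the dispatch weights."""
    size = len(next(iter(EXPERTS.values())))
    return [sum(weights[t] * EXPERTS[t][i] for t in EXPERTS) for i in range(size)]


def adapt(components: list[float], scale: list[float]) -> list[float]:
    """Second pass, step 2: rescale only the selected components of the weights."""
    return [c * s for c, s in zip(components, scale)]


base_components = [2.0, 1.0, 4.0]  # stand-in for the adaptable part of a weight matrix
weights = dispatch("solve the integral equation in python")
adapted = adapt(base_components, mix_experts(weights))
print(weights)   # {'math': 0.75, 'code': 0.25}
print(adapted)   # [2.5, 1.0, 3.0]
```

The rest of the model stays frozen in this picture: only the small scaling vector changes from prompt to prompt, which is why the approach can be cheaper than retraining.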

Transformer² surpasses prevalent methods like LoRA by using fewer parameters and achieving greater efficiency. Its versatility extends across various LLM architectures and modalities, including vision-language tasks, making it a scalable solution for enhancing adaptability and task-specific performance. By offering a dynamic, self-organizing approach, Transformer² paves the way for more responsive AI systems capable of efficiently handling diverse and unforeseen challenges. This development represents a leap forward in creating AI that can autonomously adapt to an ever-expanding array of tasks without extensive retraining.

LLM Of The Week

MiniMax-01

The MiniMax-01 series, featuring MiniMax-Text-01 and MiniMax-VL-01, introduces a new approach to AI modeling aimed at processing extended contexts. Combining "lightning attention" with a Mixture of Experts (MoE) architecture, these models boast 32 experts and a total of 456 billion parameters, with 45.9 billion activated per token. This design allows efficient training and inference across contexts spanning millions of tokens, making the models comparable to top-tier models like GPT-4o while providing significantly longer context windows.
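For a rough sense of what those numbers mean, the sketch below computes the share of parameters active per token and shows a toy top-k router picking experts for a single token. The parameter counts and the expert count come from the text above; the `TOP_K = 2` value, the random router scores, and the `route` helper are assumptions for illustration only.

```python
import random

TOTAL_PARAMS_B = 456.0   # total parameters in billions (from the text)
ACTIVE_PARAMS_B = 45.9   # parameters activated per token in billions (from the text)
NUM_EXPERTS = 32         # number of experts (from the text)
TOP_K = 2                # assumption: experts chosen per token is not stated in the text

# Share of the model that does work for any single token.
active_fraction = ACTIVE_PARAMS_B / TOTAL_PARAMS_B


def route(scores: list[float], k: int = TOP_K) -> list[int]:
    """Return the indices of the k highest-scoring experts for one token."""
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]


rng = random.Random(0)  # seeded so the toy scores are reproducible
router_scores = [rng.random() for _ in range(NUM_EXPERTS)]  # hypothetical router output
chosen = route(router_scores)

print(f"active fraction: {active_fraction:.1%}")  # active fraction: 10.1%
print(f"experts used for this token: {chosen} of {NUM_EXPERTS}")
```

In other words, roughly a tenth of the model is exercised for each token, which is how a 456-billion-parameter model can keep per-token compute closer to that of a much smaller one.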

MiniMax-Text-01 can handle up to 1 million tokens during training and extrapolate to 4 million tokens during inference at an affordable cost. Meanwhile, MiniMax-VL-01 is enhanced through continued training on 512 billion vision-language tokens. Experiments indicate that these models match the performance of state-of-the-art counterparts while offering a context window that's 20 to 32 times longer. By publicly releasing the MiniMax-01 series, the developers aim to push the boundaries of what's possible in large-scale AI applications, making advanced capabilities accessible for broader use cases.

Best Prompt of the Week 🎨

A surreal illustration of a faceless humanoid figure in a gradient of pink, orange, purple, blue, and teal, set against a tranquil background. The head features vibrant, dreamlike floral elements and abstract shapes, including a fish and glowing orbs, emanating a sense of calm and imagination. The figure's eyes are minimalist and glowing white, and a single pastel flower covers the mouth. Ethereal lighting and soft textures create a dreamlike and futuristic atmosphere. Style: abstract and contemporary, with smooth gradients and fine details.

Today's Goal: Try new things 🧪

Acting as a Productivity Coach

Prompt: I want you to act as a productivity coach for individuals with ADHD. You will create a structured, yet flexible, daily plan specifically designed to help someone who is trying hard to accomplish their tasks but feels overwhelmed by their to-do list. You will identify strategies to break tasks into manageable steps, prioritize effectively, and build routines that reduce decision fatigue. Additionally, you will outline techniques for staying motivated, managing distractions, and incorporating short breaks to maintain focus. My first suggestion request is: "I need help creating a daily activity plan for someone with ADHD who wants to implement their tasks effectively without feeling overwhelmed."

This Week’s Must-Watch Gem 💎